🤖 AI Coders Are Coming For You

Global code-hosting powerhouse GitHub released a report on the economic impact of AI-powered coding assistants – notably their own product Copilot. Aside from the back-of-a-napkin calculation and claim that systems like Copilot will add a staggering $1.5 trillion to the global economy by 2030, the report does have some fascinating data on user satisfaction and individual programmers’ productivity: Users accepted a solid 30% of code suggestions (a number that will surely only go up as the systems become more robust over time). GitHub’s researchers found, not surprisingly, that Copilot helps less experienced developers more – pointing toward a future in which more people will be able to solve their code-related challenges. Here’s the paper and a recording of a talk discussing the results.

💥 Bing! Microsoft Copilot lands

I don’t think one can overstate the disruptive potential of the soon-to-be omnipresent Microsoft Copilot in some of the world’s most used software, Microsoft Office. After integrating Copilot into the Bing search engine (on its Edge browser), the Redmond-based software giant started rolling out deeper integrations into Windows and, soon, Office. Prepare yourself for AI-generated emails via Outlook, reports and proposals via Word, financial calculations via Excel, and – gasp – slide decks created by Copilot inside PowerPoint. A brave new world open to most white-collar workers in the world. It turns out – it still pays to own the operating system…

💬 Prompts Suck

You almost certainly have crafted a prompt for ChatGPT or another LLM lately. Did it frustrate you? Were you baffled at how complex and long some of the “best practice” prompts you can find online are? Or did you get little more than garbage in return (yet confidently presented as truth)? There is a reason why prompt engineering is an actual job (although one which, I bet, will have a very short shelf life). The future for most LLMs will, almost certainly, be different input modalities – buttons, controls, touch surfaces – instead of an ambiguous text prompt. Stanford’s Varun Shenoy has some concise thoughts about this.

💼 The Size of Firms and the Nature of Innovation

Think income inequality in countries is bad (and getting worse)? Take a look at firm concentration: According to a recent study on “100 Years of Rising Corporate Concentration,” the top 1% of firms in the US raked in 80% of all sales. A massive disparity that is increasing over time – another core driver of what we have called “The Great Bifurcation.” Firms either orient themselves towards fully vertically integrated “super-firms” or focus on specific niche markets with a high level of modularity (i.e., most components of their value chain are outsourced). With this comes a shift in innovation potential – a recent meta-analysis found that the larger the firm, the more incremental and less disruptive innovation happens inside the firm. In essence: Startups disrupt, and incumbents innovate.

🏢 The effects of COVID-induced WFH-policies will be felt for a long time to come

Economic analysis firm Capital Economics is out with a new report stating that office values will not regain their pre-pandemic peak prices until well into 2040. Fortune’s write-up provides further details. For example, 56% of surveyed firms have adopted a hybrid work model, and key swipes (an indicator of an office’s utilization) are down 50% from early 2020 levels. As much as this hits commercial real estate investors, it has knock-on effects on many other pieces of infrastructure – from restaurants to commuter traffic. Ever more reasons to rethink urban and suburban living in a new world.

💅 Brands and Influencers Go Virtual

What do luxury-goods empire LVMH, NIKE, and Epic Games have in common? They all collaborate to bring offline brands into virtual worlds. Epic Games has cornered the virtual world market with its blockbuster title Fortnite and the ever-growing Unreal Engine development tool ecosystem – and brands are eager to play. Meanwhile, virtual influencers such as Miquela, with her 2.8 million followers on Instagram, or Lu do Magalu, clocking in at 6.3 million Instagram fans, are competing with real-world celebrities for eyeballs and attention. Talking of which – real-world celebrities are rushing to create (and monetize) virtual clones of themselves. Up next? Consumers replacing their personal attention with AIs consuming and interacting with all the synthetic brand and celebrity content. “Have your AI talk to my AI” will become the default.

🤯 Even Google Itself Doesn’t Trust Its Own AI

The company warned its employees not to use Bard (its LLM) with any sensitive data as it might leak this data in future interactions with other users. To add insult to injury, Google also warned its developers that code generated by Bard might be buggy and/or bloated, and the cost of fixing the code might be higher than the assumed cost savings of using Bard in the first place — brave new world.

😨 Some People in the HackerNews Community Exhibit Existential AI Angst

HackerNews, the news bulletin and community run by startup accelerator program and investment fund Y Combinator, is the de facto place for startup founders and tech talent to come together and discuss. A recent (anonymous) post by a software engineer on his fear of AI destroying his career (and thus financial security) stoked nearly 200 comments on the impending AI apocalypse (or not).

😯 Surprise, Surprise: Startups, Not Incumbents, Drive Commercialization of High-Impact Innovations

In a recent paper, Kolev et al. show the growing importance startups play in commercializing high-impact (and oftentimes disruptive) innovations. The reason is simple:

Startups have more incentive than incumbent firms to engage in potentially disruptive R&D because large, established firms have more to lose from the discovery of new technologies that replace traditional ways of doing things. With no existing operations, startups have nothing to lose and much to gain from disruptive innovation. (via NBER)

Lessons for incumbents: Become good at partnering with startups.

🛍️ Direct-to-Consumer brands are going direct(er) – by manufacturing themselves

DTC has been a massive trend over the last couple of years and one which accelerated the great bifurcation of consumer markets (our Hourglass Economics model). Now the companies are starting to vertically integrate in an effort to better control quality and increase profits – which should prove further trouble for the companies stuck in the fat middle of “value for money” (or maybe more accurately, “a blend of bland, chill, premium mediocre”).

🌏 Globalization changes shape but doesn’t reverse

In a recent paper by Thun et al., the research team introduced the concept of “massive modularity” – a form of economic coordination that accommodates complexity at scale and is a signature feature of multilayered component ecosystems underlying the ICT hardware industry (and arguably many others). Modularity, or in be radical’s verbiage, “stacks,” is a core enabler for companies to operate efficiently and effectively in increasingly bifurcated markets – small, nimble, niche players at the top, and large, vertically-integrated companies at the bottom and middle of the market. Massive modularity also means that processes are increasingly hard to decouple and reshore – a counter-indicator to the growing near-shoring narrative.

🚕 UK rental car service delivers cars via remote control

Getting to Ithaca, the fabled destination of the long journey of Odysseus, was never the point. Similar to the famous story of the trials and tribulations of our Greek hero, in technology we oftentimes fixate on the end state and ignore the journey. Case in point: Self-driving cars. We might well be decades away from truly fully autonomous vehicles – waiting for this end state might prove to be costly. Yet, on the glide path toward full autonomy, we develop highly reliable remote controls for vehicles. Turns out – that is good enough to solve many consumer pain points. Fetch, a UK-based rental car company, turns this idea into reality by delivering your car to your doorstep via human-operated remote control.

🤖 Chirper.ai is the first social media for our AI overlords – no humans allowed.

It looks like Twitter, it feels like Twitter, and it has accounts posting and commenting – yet something is different: Every single account on Chirper.ai is an AI. The way it works is that users (actual humans) set up AI accounts, give them a persona complete with interests and character quirks, and let them start posting. The AI generates posts, including hashtags – which other AI bots start commenting on. It’s a fascinating view into how fully autonomous cyborg societies might work. Here is a good summary of how it works and what’s going on in Chirper-land.

🧑‍💻 Stack Overflow Moderators Go On Strike

Open source AI community Hugging Face released a visual summary of the “Can AI Code?” challenge – and the results look promising. Given software’s inherent logic and structure, it is a prime candidate for generative AI to do its magic. But every coin has two sides. Coding Q&A site Stack Overflow (which has become the de facto standard for developers to look things up) recently issued a near-total prohibition on moderating AI-generated content – and the moderators are unhappy. Their main point is that, because LLMs like GPT-4 have a tendency to “hallucinate,” the site is being flooded with inaccurate information. Take this as the harbinger of things to come – we will see more and more content being generated by generative AI and more and more content being simply inaccurate.

👋 Hello AI, Goodbye Search

In the beginning, there was search.

In 1997, serial entrepreneur and investor Bill Gross launched the GoTo.com search engine out of his proto-startup incubator Idealab. Competing with then-heavyweights such as Lycos and Excite, Gross’ big idea had its moment at the TED conference the following February: Gross introduced paid advertisement next to search results, tied to a user’s search query, and sold through an auction bidding mechanism.

Two and a half years later, in October 2000, the up-and-coming search company from Mountain View, California, Google, launched a refined version of Gross’ innovation and called it “AdWords.”

The rest became history.

Eighteen years later, OpenAI publicly launched the first version of GPT (Generative Pre-trained Transformer). Within four short years, we have moved through numerous iterations, with OpenAI launching ChatGPT in November 2022.

And this is where the world of search advertising starts spinning out of control.

And then there came AI…

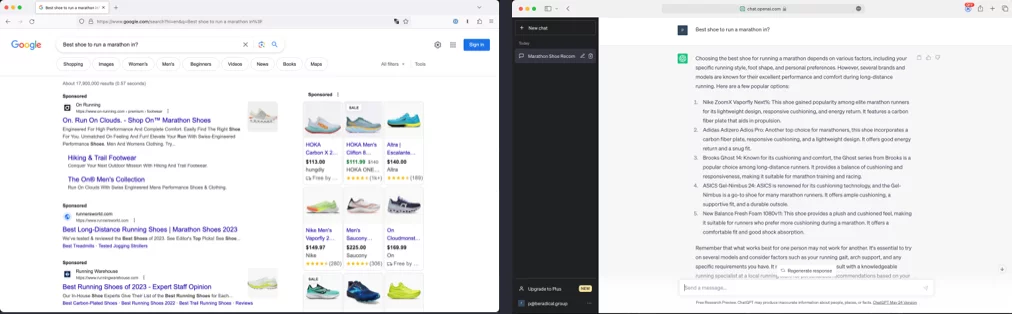

Try a search for “best shoes to run a marathon in,” and Google welcomes you with sponsored listing after sponsored listing. As a matter of fact, on Google’s US site, every single link and placement above the fold (i.e., in the visible area of the screen) is an ad. Scroll down, and you will find a near-endless number of search engine-optimized listicles linking to affiliate marketing programs.

Execute the same query on ChatGPT. After a brief moment to “think,” the little typing cursor reveals a list of five popular options (spanning five brands), including the specific benefits of each model. ChatGPT closes its suggestions with a recommendation to try different shoes on and encourages you to visit a knowledgeable local running shop.

The latter interaction is so obviously better that it makes you question why you would ever consider asking Google Search anything ever again. It is the same feeling people had when they experienced Google Search for the first time in the late 90s/early 00s. And the rest became history.

And things become weird…

Consider that your average online store gets around half of its traffic from searches. Most media publications get (much to their chagrin) an equal amount of traffic from the same source. The discovery of new apps, websites, products, and services is driven by search engine traffic.

It is not hard to imagine that we will experience a mass migration of users’ questions away from Google Search (and its brethren) to AIs such as ChatGPT. It is entirely unclear how this will play out (at least in the beginning – longer term, we are almost certainly guaranteed the enshittification of AI). The most likely scenario is that oodles of online stores will become financially unsustainable. We will see a further acceleration of the concentration of purchases into players with strong, established brands.

The quirky new marathon shoes that received rave reviews from some early adopters and hardcore runners? ChatGPT might not think that they deserve mention in its response to your question…